What is Langfuse?

Langfuse is an open-source LLM engineering platform that helps teams trace API calls, monitor performance, and debug issues in their AI applications.

LangChain Tracing

Langfuse Tracing integrates with LangChain through LangChain Callbacks (Python, JS). With the callback handler attached, the Langfuse SDK automatically creates a nested trace for every run of your LangChain application, so you can log, analyze, and debug it. You can configure the integration through (1) constructor arguments or (2) environment variables. To get Langfuse credentials, sign up at cloud.langfuse.com or self-host Langfuse.

Constructor arguments
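
A minimal sketch of the constructor-argument route. It assumes the Langfuse Python SDK v2, where the handler is importable as `langfuse.callback.CallbackHandler` and accepts `public_key`, `secret_key`, and `host`; other SDK versions may expose the handler under a different module, so check the version you have installed. The helper name `make_langfuse_handler` and the placeholder keys are illustrative only.

```python
def make_langfuse_handler(
    public_key: str,
    secret_key: str,
    host: str = "https://cloud.langfuse.com",
):
    """Build a Langfuse callback handler from explicit credentials."""
    # Imported inside the function so this sketch only needs the `langfuse`
    # package at call time. Assumption: SDK v2 import path.
    from langfuse.callback import CallbackHandler

    return CallbackHandler(public_key=public_key, secret_key=secret_key, host=host)


# Usage (placeholder keys; replace with the ones from your project settings):
# langfuse_handler = make_langfuse_handler("pk-lf-...", "sk-lf-...")
```

When self-hosting, pass your own deployment URL as `host` instead of the cloud default.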

Environment variables
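
A small sketch of the environment-variable route, assuming the SDK reads `LANGFUSE_PUBLIC_KEY`, `LANGFUSE_SECRET_KEY`, and `LANGFUSE_HOST`. The helper `missing_langfuse_vars` and the placeholder values are hypothetical and only there to fail early when a key is not set.

```python
import os

REQUIRED_VARS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY")


def missing_langfuse_vars(env=os.environ):
    """Return the required Langfuse variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Placeholder values for illustration only; use your own project's keys.
# setdefault leaves any value that is already present in the environment.
os.environ.setdefault("LANGFUSE_PUBLIC_KEY", "pk-lf-placeholder")
os.environ.setdefault("LANGFUSE_SECRET_KEY", "sk-lf-placeholder")
os.environ.setdefault("LANGFUSE_HOST", "https://cloud.langfuse.com")

if missing_langfuse_vars():
    raise RuntimeError(f"Missing Langfuse settings: {missing_langfuse_vars()}")
```

In practice the keys usually come from the shell or a secrets manager rather than from code; the check at the end is the part worth keeping.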

LangGraph Tracing

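This part shows how Langfuse, through its LangChain integration, helps you debug, analyze, and iterate on a LangGraph application.

Initialize Langfuse

Note: You need to run at least Python 3.11 (GitHub Issue). Initialize the Langfuse client with the API keys from your project settings in the Langfuse UI, and add them to your environment.

Simple chat app with LangGraph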

What we will do in this section:

- Build a support chatbot with LangGraph that can answer general questions

- Trace the chatbot's inputs and outputs with Langfuse

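Create the agent

Start by creating a StateGraph. A StateGraph object defines the structure of the chatbot as a state machine. We add nodes to represent the LLM and the functions the chatbot can call, and edges to specify how the bot transitions between these functions.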
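
The node-and-edge structure described above can be sketched as follows. This is an illustration under assumptions, not the original notebook's code: it uses `StateGraph`, `START`, `END`, and `add_messages` from LangGraph, while `build_chat_graph` and the `respond` callable (a stand-in for a real LLM call, so no model provider is needed) are hypothetical names.

```python
from typing import Annotated, Callable, TypedDict


def build_chat_graph(respond: Callable[[str], str]):
    """Compile a one-node LangGraph chatbot around `respond` (a stand-in LLM)."""
    # Imported here so the sketch only needs `langgraph` when it is called.
    from langgraph.graph import END, START, StateGraph
    from langgraph.graph.message import add_messages

    class State(TypedDict):
        # add_messages appends new messages instead of overwriting the list.
        messages: Annotated[list, add_messages]

    def chatbot(state: State):
        # Node: answer the latest message and return it as a state update.
        last = state["messages"][-1]
        return {"messages": [("assistant", respond(last.content))]}

    builder = StateGraph(State)
    builder.add_node("chatbot", chatbot)  # node: the function to run
    builder.add_edge(START, "chatbot")    # edge: entry point to chatbot
    builder.add_edge("chatbot", END)      # edge: chatbot to finish
    return builder.compile()
```

To use a real model, replace `respond` with a function that calls your LLM and returns its text.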

Add Langfuse as a callback to the invocation

Now add the Langfuse callback handler for LangChain to trace the steps of the application: config={"callbacks": [langfuse_handler]}
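
A minimal sketch of passing that config at call time. It assumes `graph` is a compiled LangGraph graph whose state has a `messages` key and `langfuse_handler` is an already constructed Langfuse callback handler; the wrapper name `run_with_tracing` is hypothetical.

```python
def run_with_tracing(graph, langfuse_handler, question: str):
    """Invoke a compiled graph with the Langfuse handler attached as a callback."""
    return graph.invoke(
        {"messages": [("user", question)]},
        # Callbacks passed in `config` are propagated to nested runs,
        # so the steps of one invocation are recorded together.
        config={"callbacks": [langfuse_handler]},
    )
```

The same `config` argument can be passed to `graph.stream(...)` if you want to consume intermediate events instead of only the final state.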

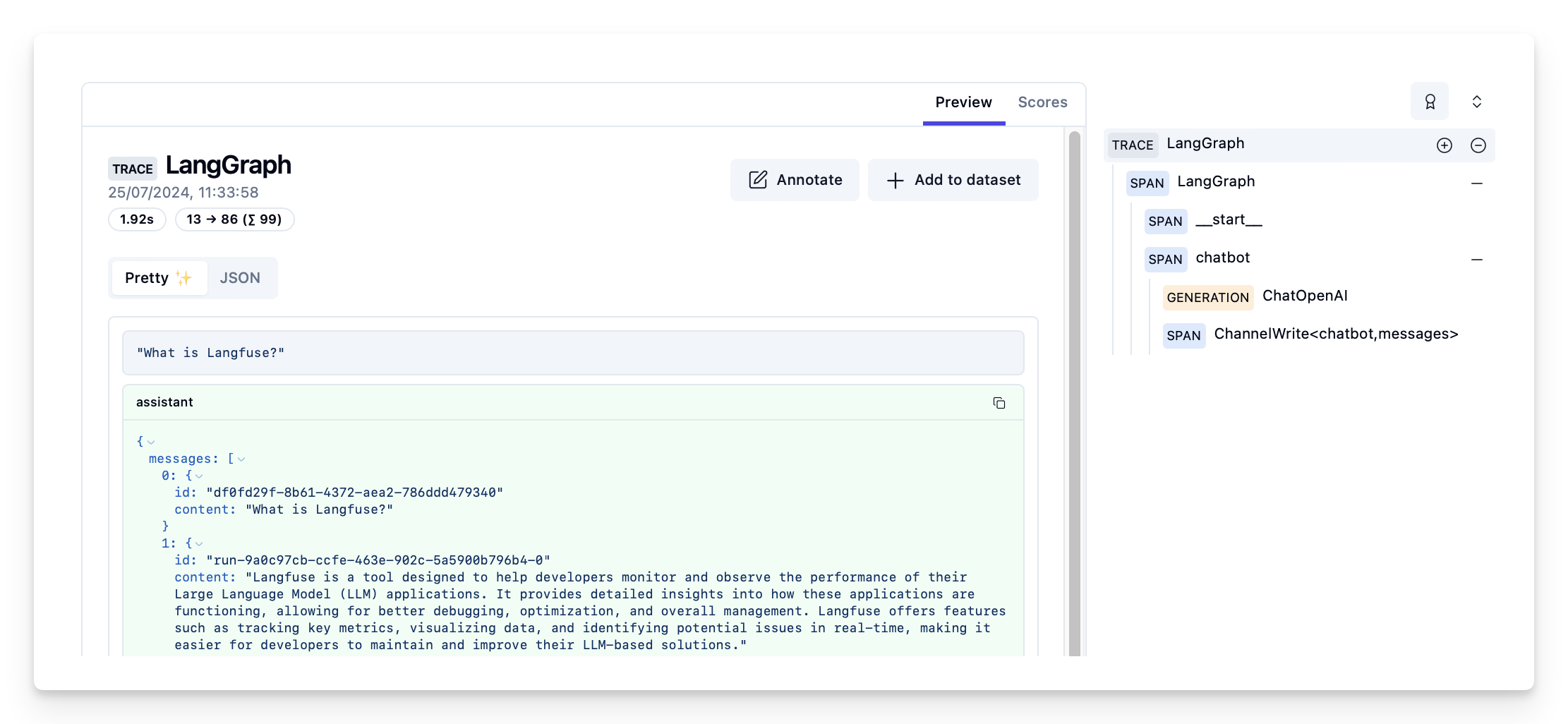

View the trace in Langfuse

Example trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/d109e148-d188-4d6e-823f-aac0864afbab

- For more examples, check out the full notebook.

- To learn how to evaluate the performance of your LangGraph application, see the LangGraph evaluation guide.
