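Argilla is an open-source data curation platform for LLMs. With Argilla, anyone can build robust language models through faster data curation that uses both human and machine feedback. It supports each step of the MLOps cycle, from data labeling to model monitoring.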

This guide shows how to use ArgillaCallbackHandler to track the inputs and responses of your LLM and generate a dataset in Argilla.

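Tracking the inputs and outputs of your LLM is useful for building datasets for future fine-tuning. This is especially true when you use an LLM to generate data for a specific task, such as question answering, summarization, or translation.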

Installation and Setup

Getting API Credentials

To get your Argilla API credentials, follow these steps:

- Go to your Argilla UI.

- Click on your profile picture and go to "My settings".

- Copy the API Key.
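A common practice is to keep the copied key out of source code and read it from the environment instead. The following is a minimal sketch of that practice. The variable names `ARGILLA_API_URL` and `ARGILLA_API_KEY` and the helper `load_argilla_credentials` are assumptions made for this illustration.

```python
import os


def load_argilla_credentials(env=os.environ):
    """Return (api_url, api_key) read from environment variables.

    The names ARGILLA_API_URL and ARGILLA_API_KEY are assumptions for this
    sketch; use whatever names your own setup expects.
    """
    names = ("ARGILLA_API_URL", "ARGILLA_API_KEY")
    # Treat unset and empty variables the same way.
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return env[names[0]], env[names[1]]
```

Passing a plain dict as `env` makes the helper easy to test. Calling it with no arguments reads the real process environment.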

Setup Argilla

To use ArgillaCallbackHandler, you first need to create a new FeedbackDataset in Argilla to keep track of your LLM experiments. To do so, use the following code:

📌 Note: Only prompt-response pairs are currently supported as `FeedbackDataset.fields`. For this reason, ArgillaCallbackHandler tracks only the prompt (the LLM input) and the response (the LLM output).
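The sketch below is a plain-Python illustration of the constraint in the note above: a dataset whose records carry exactly two fields, `prompt` and `response`. `PromptResponseDataset` is a hypothetical in-memory stand-in. It is not Argilla's `FeedbackDataset` API and does not talk to an Argilla server.

```python
from dataclasses import dataclass, field


@dataclass
class PromptResponseDataset:
    """Hypothetical in-memory stand-in for a dataset with only two fields."""

    name: str
    field_names: tuple = ("prompt", "response")
    records: list = field(default_factory=list)

    def add_record(self, **fields):
        # Accept exactly the supported fields: no more, no fewer.
        if set(fields) != set(self.field_names):
            raise ValueError(f"expected fields {self.field_names}, got {tuple(fields)}")
        self.records.append(dict(fields))
```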

Tracking

To use ArgillaCallbackHandler, you can use the following code, or reproduce one of the examples presented in the sections below.

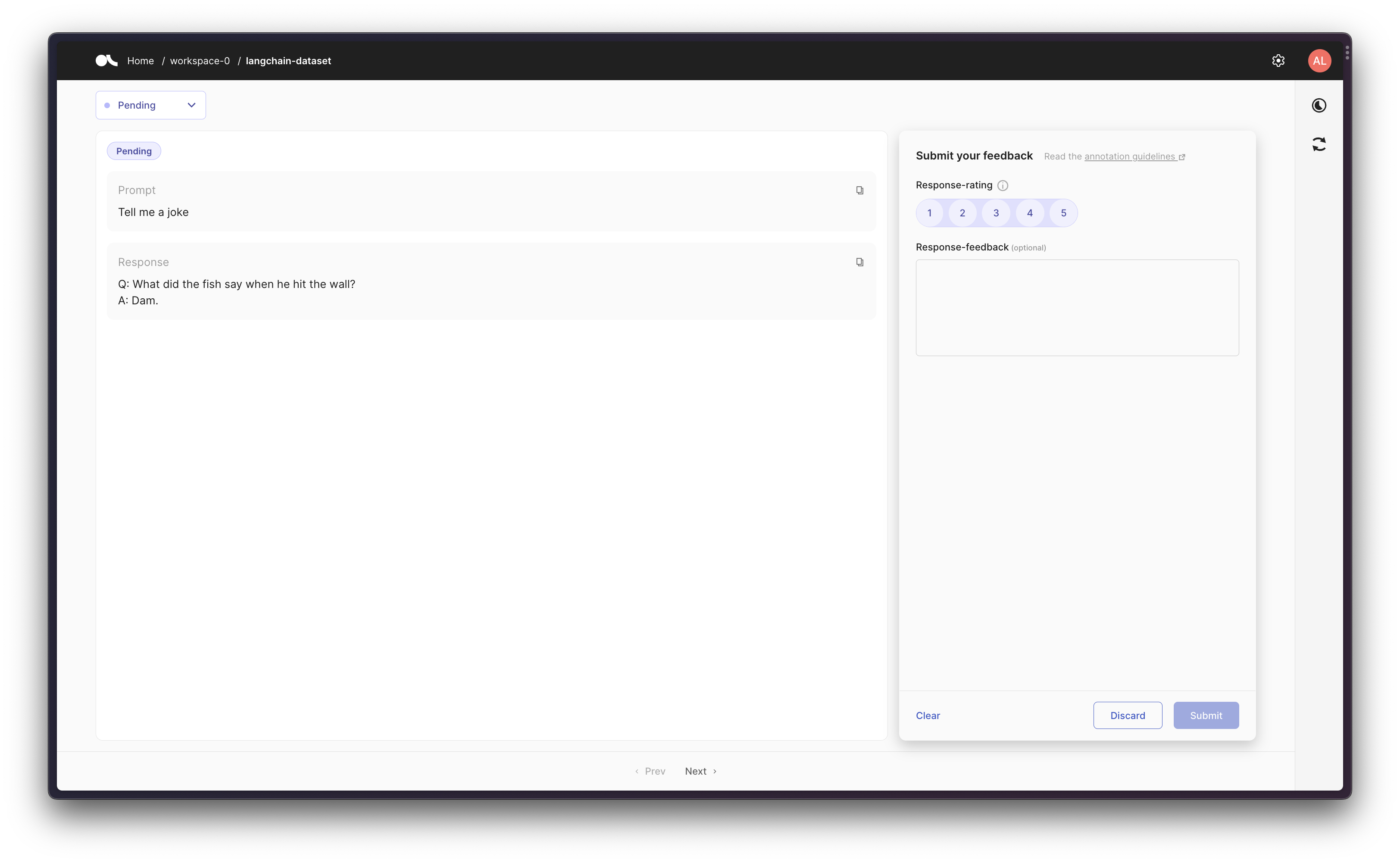
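Callback handlers work by being notified when an LLM call starts and when it ends. The handler pairs each input with its output. The following is a minimal stdlib sketch of that pattern. The method names echo LangChain's `on_llm_start` and `on_llm_end` hooks, but the signatures here are simplified. `PromptResponseRecorder` and `call_llm` are hypothetical, not the real ArgillaCallbackHandler.

```python
import uuid


class PromptResponseRecorder:
    """Hypothetical handler that pairs each LLM input with its output.

    Method names mirror LangChain's hooks; signatures are simplified.
    """

    def __init__(self):
        self._pending = {}  # run_id -> prompt still waiting for its response
        self.records = []   # completed {"prompt": ..., "response": ...} pairs

    def on_llm_start(self, prompt, run_id):
        self._pending[run_id] = prompt

    def on_llm_end(self, response, run_id):
        prompt = self._pending.pop(run_id)
        self.records.append({"prompt": prompt, "response": response})


def call_llm(prompt, callbacks=()):
    """Stub LLM that notifies every callback before and after generating."""
    run_id = uuid.uuid4()  # lets a handler match the end event to its start
    for cb in callbacks:
        cb.on_llm_start(prompt, run_id)
    response = prompt.upper()  # placeholder for a real model call
    for cb in callbacks:
        cb.on_llm_end(response, run_id)
    return response
```

Keying pending prompts by a run id, rather than keeping a single "last prompt", keeps the pairs correct if several calls overlap.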

Scenario 1: Tracking an LLM

First, let's run a single LLM a few times and capture the resulting prompt-response pairs in Argilla.

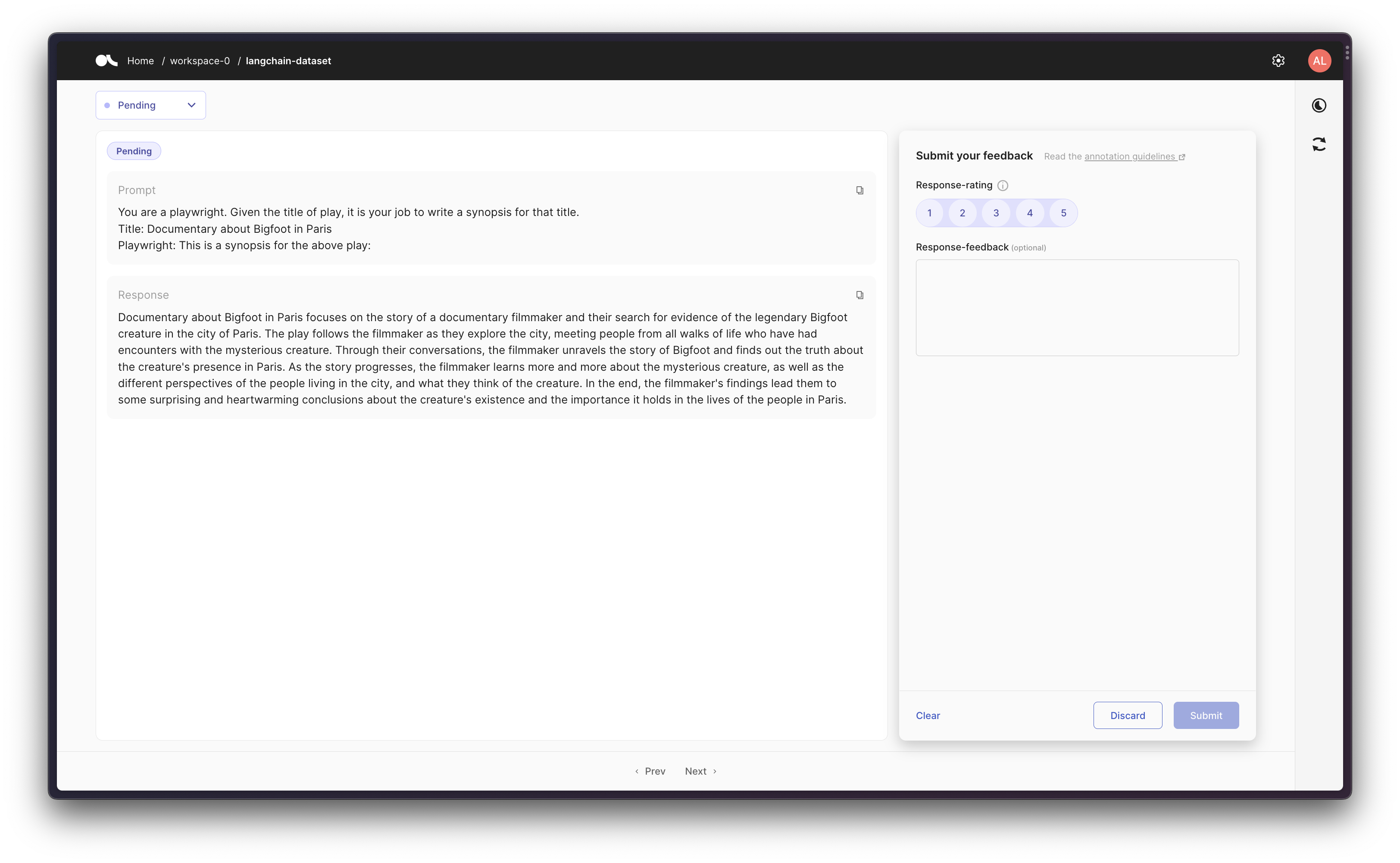
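The sketch below shows the shape of the data this scenario produces: one prompt-response record per LLM call. It wraps the model directly instead of using a callback handler. `echo_llm` and `track_runs` are hypothetical stand-ins, not part of LangChain or Argilla.

```python
def echo_llm(prompt):
    # Hypothetical stand-in for a real model.
    return f"Answer to: {prompt}"


def track_runs(llm, prompts):
    """Call `llm` once per prompt and collect one record per call."""
    records = []
    for prompt in prompts:
        response = llm(prompt)
        records.append({"prompt": prompt, "response": response})
    return records
```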

Scenario 2: Tracking an LLM in a chain

Next, you can create a chain using a prompt template and track the initial prompt and the final response in Argilla.

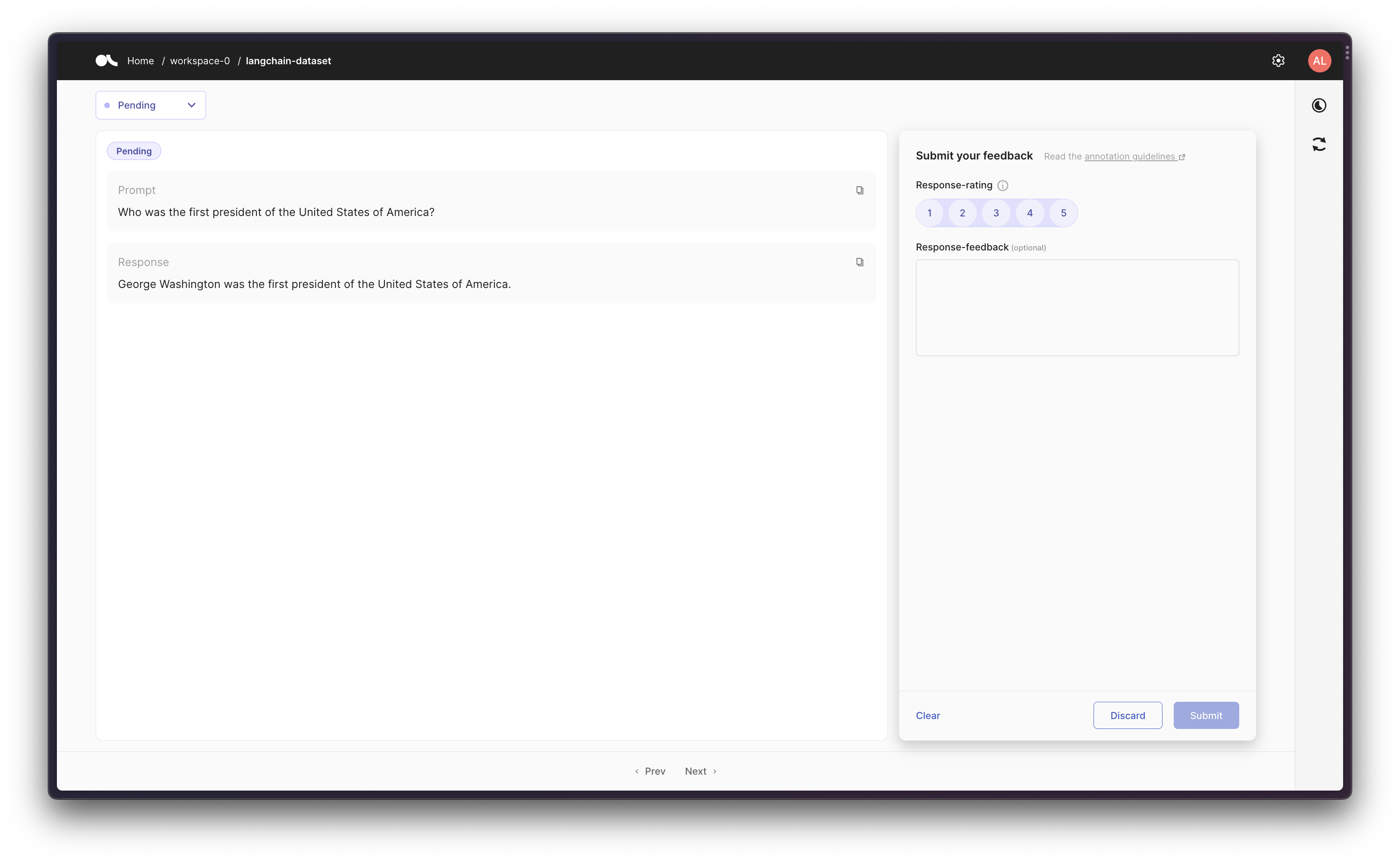
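The following is a minimal plain-Python sketch of this scenario. It assumes a template with `str.format`-style placeholders, which is the same `{variable}` syntax LangChain's default prompt templates use. `make_chain` is hypothetical and is not LangChain's chain API. Each run records the filled-in prompt that reaches the LLM together with the chain's final response.

```python
def make_chain(template, llm, records):
    """Hypothetical two-step chain: fill a prompt template, then call the LLM."""

    def run(**variables):
        prompt = template.format(**variables)  # fill {placeholders}
        response = llm(prompt)
        # One record per run: the filled-in prompt and the final response.
        records.append({"prompt": prompt, "response": response})
        return response

    return run
```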

Scenario 3: Using an Agent with Tools

Finally, as a more advanced workflow, you can create an agent that uses some tools. ArgillaCallbackHandler tracks inputs and outputs but not intermediate steps or thoughts. For a given prompt, it therefore logs the original prompt and the final response to that prompt.

This scenario uses the Google Search API (Serp API). You need to install `google-search-results` with `pip install google-search-results` and set your Serp API key with `os.environ["SERPAPI_API_KEY"] = "..."` (you can find the key at serpapi.com/dashboard). Otherwise, the example below won't work.
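The sketch below illustrates the distinction drawn above between what an agent does and what gets logged. It is plain Python: `run_agent` is a hypothetical agent loop, and a fake tool stands in for Serp API, so no search service is called. Tool calls and observations stay in a local list. Only the original question and the final answer are written to `records`.

```python
def run_agent(question, tools, records):
    """Hypothetical agent: consult each tool, then produce a final answer."""
    steps = []  # intermediate (tool_name, observation) pairs, never logged
    for name, tool in tools.items():
        observation = tool(question)
        steps.append((name, observation))
    answer = f"Final answer based on {len(steps)} tool call(s)"
    # Log only the top-level input and the final output.
    records.append({"prompt": question, "response": answer})
    return answer, steps
```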
